Our Tips for Running a Remote Hackathon

Simon · April 6, 2021

As a fully remote company, we have always looked for ways to keep people working together. One thing that works well for us is to run remote hackathons, where our engineers get together to work on something unusual. These have been successful enough that we have made them a quarterly event.

We have written about our process before, in our first blog post on how we got this started and in a follow-up in October 2020. We’ve refined the process since then, and I’d like to share some advice based on what we’ve learned from running these events successfully over a year and a half.

Planning

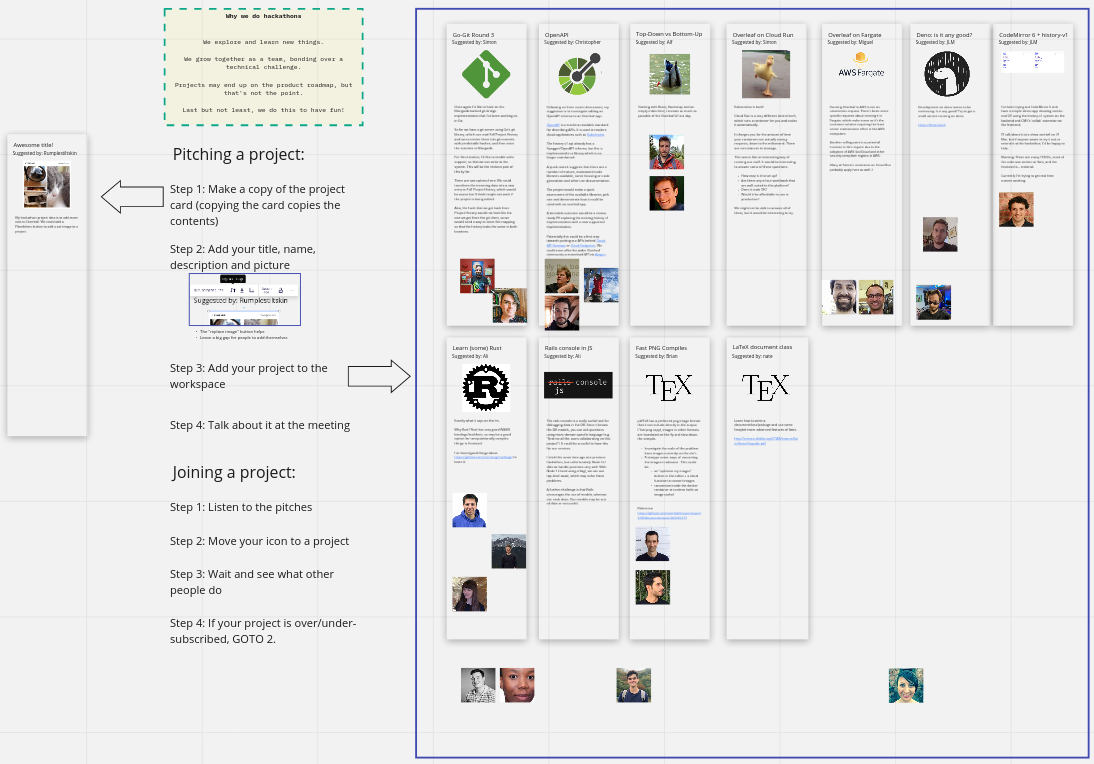

We use a Miro board for planning, which works well. We use Miro a lot, so it’s a tool with which our engineers are familiar. When we started, people would write up their ideas on post-it notes and post them on the board. We would then hold a pitch session where everyone voted on which projects should go ahead.

Since our first session, we have learned that it’s better to write more fleshed-out proposals so that people can read them in advance. Doing so helps people make decisions during the pitch session and helps give people other project ideas.

One problem with the original voting mechanism was that each person had three votes to use. A project could collect plenty of second-preference votes without anybody actually wanting to work on it. We have since switched to a system where people move their avatar to their preferred project and then move it elsewhere if a team has too many or too few people. This system lets us start the day knowing who is going to be working on what.

Running the Day

Although we use Slack for most of our work-based communication, we’ve used Discord for hackathons. It provides a few advantages:

- You can set up permanent meeting rooms so that people can drop in and out or just keep a connection open.

- It seems to put less of a strain on your system when screen sharing.

- You can have multiple people sharing their screens at once, which helps when a team is working on different parts of the stack in parallel, for example when splitting between frontend and backend.

Presenting

The format of this meeting hasn’t changed much since we started. The teams show their discoveries in a whole-company presentation. We’ve learned that we need to be stricter in timekeeping during these meetings, as there are sometimes quite a few questions.

Setting Expectations

We’ve found that it helps to be clear about what the expected outcomes are for the projects in advance. It can be disappointing to work on something expecting it to end up in the product, only to find that it doesn’t get picked up.

It’s essential to be clear that hackathons are for learning, picking up new skills, or seeing if an idea works in a proof of concept. It’s OK for the project to fail because we still learn valuable information along the way.

Projects From Our Latest Event

We ran a hackathon at the end of March. These are some of the projects we worked on.

Go-Git (Round 3)

We’ve been looking at using the go-git library to see if we could create an improved Git Bridge implementation. We are still a long way from this being possible, but we have learned some valuable things.

We have revisited this project twice before. The first time, we managed to get a barebones implementation of the git-over-ssh protocol working, using Overleaf to manage a user’s public keys. On our second iteration, we looked at converting Overleaf Project History into Git Commits with reproducible hashes.
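To illustrate why reproducible hashes are possible at all: Git names a commit by hashing its contents (tree, parent, author, committer, timestamps and message), so a conversion that derives every one of those fields from the stored history will always produce the same hash. The sketch below shows the idea in TypeScript on Node.js. It is not the code from the hackathon, and the `HistoryEntry` shape, the fixed `+0000` timezone and the example values are assumptions made for illustration.

```typescript
import { createHash } from "node:crypto";

// Git names every object by the SHA-1 of "<type> <byte length>\0<body>".
function gitObjectHash(type: "blob" | "commit", body: string): string {
  const bytes = Buffer.from(body, "utf8");
  const header = Buffer.from(`${type} ${bytes.length}\0`, "utf8");
  return createHash("sha1").update(header).update(bytes).digest("hex");
}

// Hypothetical shape for one history entry; not Overleaf's actual schema.
interface HistoryEntry {
  treeHash: string; // hash of the tree object for this snapshot
  parentHash?: string; // previous commit, absent for the first one
  authorName: string;
  authorEmail: string;
  timestampSeconds: number; // taken from the history entry, never from the clock
  message: string;
}

// Build the commit body in Git's commit object format.
// Assumption: author and committer are identical and the timezone is fixed.
function commitBody(entry: HistoryEntry): string {
  const ident = `${entry.authorName} <${entry.authorEmail}> ${entry.timestampSeconds} +0000`;
  const lines = [`tree ${entry.treeHash}`];
  if (entry.parentHash) lines.push(`parent ${entry.parentHash}`);
  lines.push(`author ${ident}`, `committer ${ident}`, "", entry.message);
  return lines.join("\n") + "\n";
}

// Example values only. The tree hash is Git's well-known empty tree.
const exampleEntry: HistoryEntry = {
  treeHash: "4b825dc642cb6eb9a060e54bf8d69288fbee4904",
  authorName: "Example Author",
  authorEmail: "author@example.com",
  timestampSeconds: 1617235200,
  message: "Update main.tex",
};
console.log(gitObjectHash("commit", commitBody(exampleEntry)));
```

Because nothing in the commit body depends on when or where the conversion runs, converting the same history entry twice gives the same commit hash.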

In this round, we looked at receiving commits within the git server, converting them to Operational Transformation updates, and pushing them into the Overleaf history service. We got a proof of concept working for updates to existing text files.
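As a rough sketch of the conversion step, the TypeScript below turns the before and after contents of a text file into delete and insert operations by trimming the common prefix and suffix. The `TextOp` shape is an assumption, loosely modelled on ShareJS-style text operations, and is not necessarily the format the Overleaf history service accepts. The sketch only covers edits to an existing text file, like the proof of concept described above.

```typescript
// Hypothetical op shape: p is a character offset into the document,
// d is text to delete at that offset, i is text to insert at that offset.
type TextOp = { p: number; d: string } | { p: number; i: string };

// Derive ops that turn `before` into `after` by skipping the shared
// prefix and suffix and replacing only the part in between.
function diffToOps(before: string, after: string): TextOp[] {
  let start = 0;
  const maxStart = Math.min(before.length, after.length);
  while (start < maxStart && before[start] === after[start]) start++;

  let endBefore = before.length;
  let endAfter = after.length;
  while (
    endBefore > start &&
    endAfter > start &&
    before[endBefore - 1] === after[endAfter - 1]
  ) {
    endBefore--;
    endAfter--;
  }

  const ops: TextOp[] = [];
  const deleted = before.slice(start, endBefore);
  const inserted = after.slice(start, endAfter);
  if (deleted) ops.push({ p: start, d: deleted });
  if (inserted) ops.push({ p: start, i: inserted });
  return ops;
}

// Apply ops in order, checking that each delete matches the document.
function applyOps(doc: string, ops: TextOp[]): string {
  let result = doc;
  for (const op of ops) {
    if ("d" in op) {
      if (result.slice(op.p, op.p + op.d.length) !== op.d) {
        throw new Error("delete does not match document");
      }
      result = result.slice(0, op.p) + result.slice(op.p + op.d.length);
    } else {
      result = result.slice(0, op.p) + op.i + result.slice(op.p);
    }
  }
  return result;
}

const exampleBefore = "\\section{Intro}\nHello world";
const exampleAfter = "\\section{Intro}\nHello brave world";
console.log(diffToOps(exampleBefore, exampleAfter));
```

Applying the resulting ops to the old contents with `applyOps` gives back the new contents, which is a cheap sanity check to run before pushing an update anywhere.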

Looking into OpenAPI

We designed some existing Overleaf services using an OpenAPI v2 (also known as Swagger) specification, which defines a language-agnostic and machine-readable interface for RESTful APIs. Development on a library we used (swagger-tools) has halted, prompting us to explore alternatives. By the end of the hackathon, we had upgraded an existing service to an OpenAPI v3 specification and replaced the swagger-tools library with oas-tools.

Alongside this, we explored integrating Express OpenAPI into a different service that did not have an API specification. We used this middleware to decorate existing code with additional information and schemas. The library then uses the extra context to validate incoming requests and produce a full OpenAPI specification, optionally exposing handlers for ReDoc/SwaggerUI.
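The general pattern can be sketched without any library: describe each route once, then use that description both to validate requests and to generate the specification. The TypeScript below is a hypothetical, minimal illustration of that idea. `RouteSpec`, `validateQuery` and `toOpenApiDocument` are invented names and are not part of the Express OpenAPI API.

```typescript
// Hypothetical route description; every name here is illustrative only.
type ParamType = "string" | "integer";

interface RouteSpec {
  method: "get" | "post";
  path: string; // OpenAPI-style path, e.g. "/project/{projectId}/history"
  summary: string;
  query: Record<string, { type: ParamType; required: boolean }>;
}

// Check raw query-string values against the route description.
function validateQuery(
  route: RouteSpec,
  query: Record<string, string | undefined>
): string[] {
  const errors: string[] = [];
  for (const [name, rule] of Object.entries(route.query)) {
    const value = query[name];
    if (value === undefined) {
      if (rule.required) errors.push(`missing required query parameter "${name}"`);
    } else if (rule.type === "integer" && !/^-?\d+$/.test(value)) {
      errors.push(`query parameter "${name}" must be an integer`);
    }
  }
  return errors;
}

// Produce a small OpenAPI v3 document from the same descriptions.
function toOpenApiDocument(title: string, version: string, routes: RouteSpec[]) {
  const paths: Record<string, Record<string, unknown>> = {};
  for (const route of routes) {
    const pathItem = paths[route.path] ?? {};
    pathItem[route.method] = {
      summary: route.summary,
      parameters: Object.entries(route.query).map(([name, rule]) => ({
        name,
        in: "query",
        required: rule.required,
        schema: { type: rule.type },
      })),
      responses: { "200": { description: "OK" } },
    };
    paths[route.path] = pathItem;
  }
  return { openapi: "3.0.3", info: { title, version }, paths };
}

const exampleRoute: RouteSpec = {
  method: "get",
  path: "/project/{projectId}/history",
  summary: "List history entries (example route)",
  query: { limit: { type: "integer", required: true } },
};
console.log(validateQuery(exampleRoute, { limit: "ten" }));
console.log(JSON.stringify(toOpenApiDocument("Example service", "1.0.0", [exampleRoute]), null, 2));
```

Keeping a single description per route means the validation rules and the published specification cannot drift apart, which is the main appeal of this style of middleware.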

Top-Down vs Bottom-Up

This project was an ambitious attempt to rebuild as much as possible of the Overleaf UI using React in one day, with a bit of experimentation along the way.

We learned some valuable lessons and discovered a new approach to developing a frontend user interface alongside a mocked backend API, using Mock Service Worker and PouchDB.

Running Server-Pro on AWS Fargate

While we currently run most of our infrastructure on Google Cloud Platform, there are several reasons why setting up Overleaf in different cloud environments is worth exploring. I’ll highlight three of them:

- To understand the experience of our OSS users who try to set up Overleaf Community Edition in AWS and other alternative environments.

- To keep an eye on the different offerings of other cloud providers to prevent vendor lock-in.

- It’s fun to try out new toys!

AWS Fargate is a managed service that runs containers without the complexity of Kubernetes, which is the framework we use to run all of our microservices on overleaf.com.

We conducted several tests throughout the day. The main takeaway is that Fargate would be most suitable for bridging the gap between our SaaS architecture and the monolithic architecture we use for Overleaf CE and Server Pro. We discovered that running a Kubernetes-like stack would be a more natural way to migrate our services if we migrated overleaf.com from Google Cloud Platform to AWS.

Running a container-based infrastructure has plenty of benefits but is challenging. ECS and Fargate could offer a good layer of abstraction to run Server Pro in a hybrid environment.

However, we did run into several technical challenges during the day that meant we struggled to get this working in the allocated time. Most notably, we manage compiles within their own Docker containers, which the main app can’t control when running on Fargate.

Trying out Deno

We took the day to explore what Deno currently has to offer as a NodeJS alternative. The first thing we liked is that Deno gives very granular control over what the app has access to. For example, you can control its access to the file system, the network, and so on.

Also, TypeScript is a first-class citizen in Deno and is supported right out of the box with no required configuration. However, Deno’s module ecosystem is still very young. While a few popular NodeJS libraries are available, the choice is still pretty limited.

It was still possible to quickly create a fully working REST API with Alosaur and TypeORM, leveraging the power of TypeScript’s annotations.

The conclusion seemed to be that while Deno is not suited for a production application yet, it can be a powerful prototyping tool for TypeScript applications.

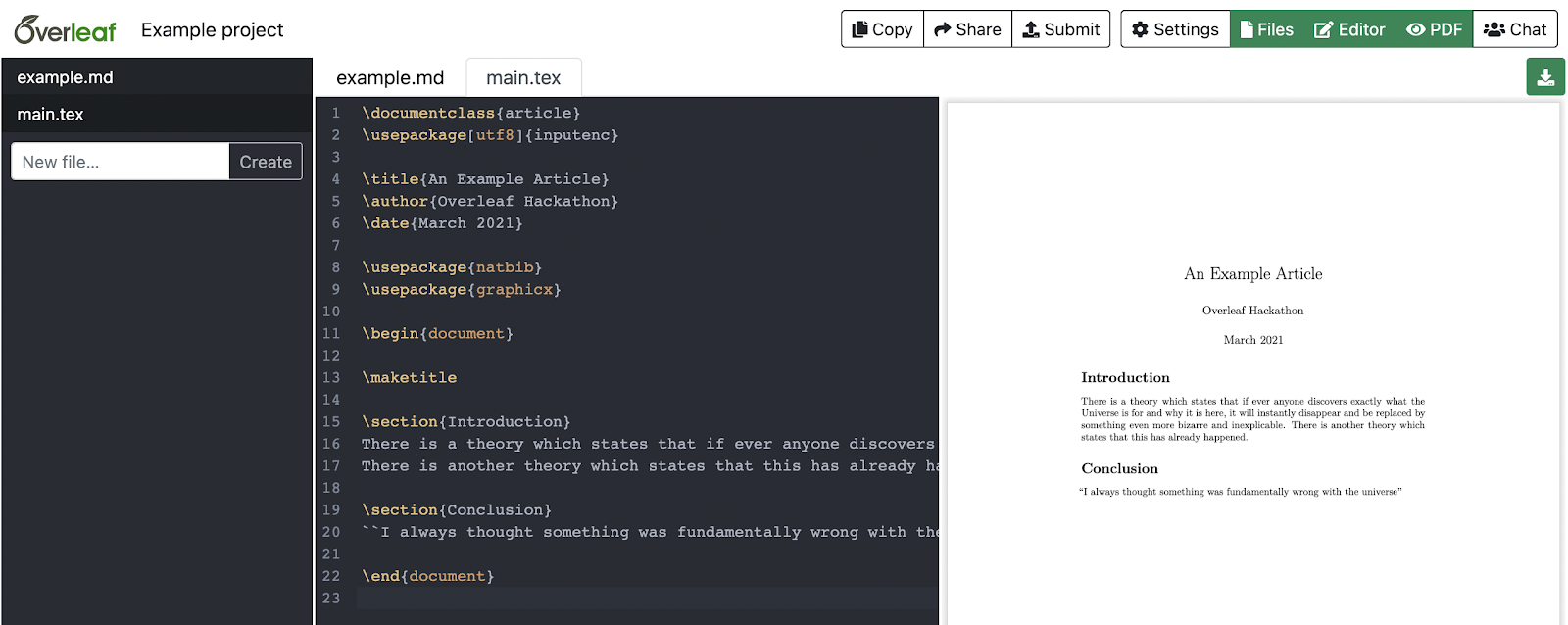

Collaborative Editing with CodeMirror 6

Overleaf is sponsoring the new version of the CodeMirror editor, CodeMirror 6, which promises better accessibility and mobile / tablet support. We learned about CM6’s new APIs and built a demo of collaborative editing in CM6. We are still a long way from bringing it up to parity with the current editor, but it looks very promising!

Speeding up Compiles with Fast PNG Copy

We wanted to try replacing images in users' projects with optimised versions to make their compiles faster. TeX can spend a long time converting images from the original format into one embeddable in the PDF. It does this on every compile, which is wasteful.

We modified our compilation environment to convert and cache the images up front. On a project with 60MB of PNGs containing a colour profile, which prevents TeX from embedding the images directly, we were able to reduce the compile time from 34s to 6s.
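One way to convert and cache up front is to key each converted image by a hash of the original bytes, so an unchanged image is converted once and reused on later compiles. The TypeScript sketch below assumes this content-hash approach, which may differ from what our compilation environment actually does. `ConvertedImageCache` and the `Converter` signature are hypothetical, and the real conversion step is left as a function passed in.

```typescript
import { createHash } from "node:crypto";

// Hypothetical converter: takes the original image bytes and returns a
// version that TeX can embed without further processing.
type Converter = (original: Buffer) => Buffer;

class ConvertedImageCache {
  private readonly byContentHash = new Map<string, Buffer>();

  constructor(private readonly convert: Converter) {}

  // Return the converted image, running the converter only the first
  // time these exact bytes are seen.
  get(original: Buffer): Buffer {
    const key = createHash("sha256").update(original).digest("hex");
    let converted = this.byContentHash.get(key);
    if (converted === undefined) {
      converted = this.convert(original);
      this.byContentHash.set(key, converted);
    }
    return converted;
  }
}

// Stand-in converter for demonstration; a real one would re-encode the image.
const exampleCache = new ConvertedImageCache((original) =>
  Buffer.concat([Buffer.from("converted:"), original])
);
const exampleImage = Buffer.from("pretend these are PNG bytes");
exampleCache.get(exampleImage); // converts
exampleCache.get(exampleImage); // served from the cache
```

Keying on content rather than file name means a renamed image still hits the cache, while an edited image is converted again.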